RAG Blueprint tutorial¶

Many of our users use Vespa to power large scale RAG Applications.

This blueprint aims to exemplify many of the best practices we have learned while supporting these users.

While many RAG tutorials exist, this blueprint provides a customizable template that:

- Can (auto)scale with your data size and/or query load.

- Is fast and production grade.

- Enables you to build RAG applications with state-of-the-art quality.

This tutorial will show how we can develop a high-quality RAG application with an evaluation-driven mindset, while being a resource you can revisit for making informed choices for your own use case.

We will guide you through the following steps:

- Installing dependencies

- Cloning the RAG Blueprint

- Inspecting the RAG Blueprint

- Deploying to Vespa Cloud

- Our use case

- Data modeling

- Structuring your Vespa application

- Configuring match-phase (retrieval)

- First-phase ranking

- Second-phase ranking

- (Optional) Global-phase reranking

All the accompanying code can be found in our sample app repo, but we will also clone the repo and run the code in this notebook.

Some of the python scripts from the sample app will be adapted and shown inline in this notebook instead of running them separately.

Each step will contain reasoning behind the choices and design of the blueprint, as well as pointers for customizing to your own application.

This is not a 'Deploy RAG in 5 minutes' tutorial (although you can technically do that by just running the notebook). This focus is more about providing you with the insights and tools for you to apply it to your own use case. Therefore we suggest taking your time to look at the code in the sample app, and run the described steps."

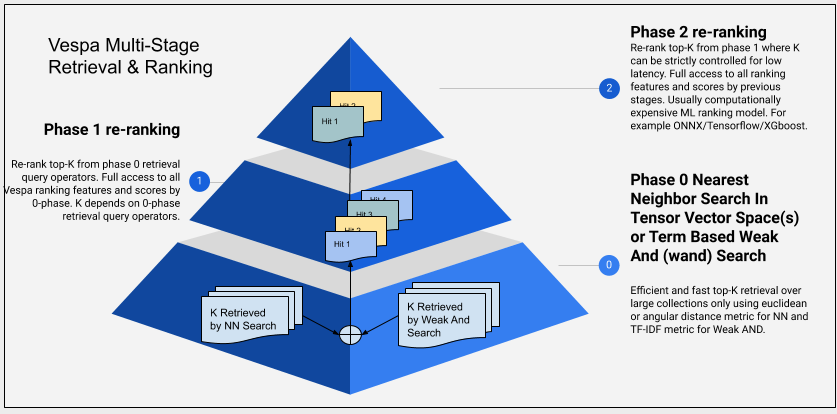

Here is an overview of the retrieval and ranking pipeline we will build in this tutorial:

Installing dependencies¶

!pip3 install pyvespa>=0.58.0 vespacli scikit-learn lightgbm pandas

zsh:1: 0.58.0 not found

Cloning the RAG Blueprint¶

Although you could define all components of the application with python code only from pyvespa, this would go against our advise on or the Advanced Configuration notebook for a guide if you want to do that.

Here, we will use pyvespa to deploy an application package from the existing files. Let us start by cloning the RAG Blueprint application from the Vespa sample-apps repository.

# Clone the RAG Blueprint sample application

!git clone --depth 1 --filter=blob:none --sparse https://github.com/vespa-engine/sample-apps.git src && cd src && git sparse-checkout set rag-blueprint

Cloning into 'src'... remote: Enumerating objects: 640, done. remote: Counting objects: 100% (640/640), done. remote: Compressing objects: 100% (350/350), done. remote: Total 640 (delta 7), reused 557 (delta 5), pack-reused 0 (from 0) Receiving objects: 100% (640/640), 62.63 KiB | 1.01 MiB/s, done. Resolving deltas: 100% (7/7), done. remote: Enumerating objects: 15, done. remote: Counting objects: 100% (15/15), done. remote: Compressing objects: 100% (13/13), done. remote: Total 15 (delta 2), reused 8 (delta 2), pack-reused 0 (from 0) Receiving objects: 100% (15/15), 92.91 KiB | 318.00 KiB/s, done. Resolving deltas: 100% (2/2), done. Updating files: 100% (15/15), done. remote: Enumerating objects: 37, done. remote: Counting objects: 100% (37/37), done. remote: Compressing objects: 100% (30/30), done. remote: Total 37 (delta 8), reused 21 (delta 6), pack-reused 0 (from 0) Receiving objects: 100% (37/37), 111.45 KiB | 401.00 KiB/s, done. Resolving deltas: 100% (8/8), done. Updating files: 100% (37/37), done.

Inspecting the RAG Blueprint¶

First, let's examine the structure of the RAG Blueprint application we just cloned:

from pathlib import Path

def tree(

root: str | Path = ".", *, show_hidden: bool = False, max_depth: int | None = None

) -> str:

"""

Return a Unix‐style 'tree' listing for *root*.

Parameters

----------

root : str | Path

Directory to walk (default: ".")

show_hidden : bool

Include dotfiles and dot-dirs? (default: False)

max_depth : int | None

Limit recursion depth; None = no limit.

Returns

-------

str

A newline-joined string identical to `tree` output.

"""

root_path = Path(root).resolve()

lines = [root_path.as_posix()]

def _walk(current: Path, prefix: str = "", depth: int = 0) -> None:

if max_depth is not None and depth >= max_depth:

return

entries = sorted(

(e for e in current.iterdir() if show_hidden or not e.name.startswith(".")),

key=lambda p: (not p.is_dir(), p.name.lower()),

)

last = len(entries) - 1

for idx, entry in enumerate(entries):

connector = "└── " if idx == last else "├── "

lines.append(f"{prefix}{connector}{entry.name}")

if entry.is_dir():

extension = " " if idx == last else "│ "

_walk(entry, prefix + extension, depth + 1)

_walk(root_path)

return "\n".join(lines)

# Let's explore the RAG Blueprint application structure

print(tree("src/rag-blueprint"))

/Users/thomas/Repos/pyvespa/docs/sphinx/source/examples/src/rag-blueprint ├── app │ ├── models │ │ └── lightgbm_model.json │ ├── schemas │ │ ├── doc │ │ │ ├── base-features.profile │ │ │ ├── collect-second-phase.profile │ │ │ ├── collect-training-data.profile │ │ │ ├── learned-linear.profile │ │ │ ├── match-only.profile │ │ │ └── second-with-gbdt.profile │ │ └── doc.sd │ ├── search │ │ └── query-profiles │ │ ├── deepresearch-with-gbdt.xml │ │ ├── deepresearch.xml │ │ ├── hybrid-with-gbdt.xml │ │ ├── hybrid.xml │ │ ├── rag-with-gbdt.xml │ │ └── rag.xml │ └── services.xml ├── dataset │ └── docs.jsonl ├── eval │ ├── output │ │ ├── Vespa-training-data_match_first_phase_20250623_133241.csv │ │ ├── Vespa-training-data_match_first_phase_20250623_133241_logreg_coefficients.txt │ │ ├── Vespa-training-data_match_rank_second_phase_20250623_135819.csv │ │ └── Vespa-training-data_match_rank_second_phase_20250623_135819_feature_importance.csv │ ├── collect_pyvespa.py │ ├── evaluate_match_phase.py │ ├── evaluate_ranking.py │ ├── pyproject.toml │ ├── README.md │ ├── resp.json │ ├── train_lightgbm.py │ └── train_logistic_regression.py ├── queries │ ├── queries.json │ └── test_queries.json ├── deploy-locally.md ├── generation.md ├── query-profiles.md ├── README.md └── relevance.md

We can see that the RAG Blueprint includes a complete application package with:

schemas/doc.sd- The document schema with chunking and embeddingsschemas/doc/*.profile- Ranking profiles for collecting training data, first-phase ranking, and second-phase rankingservices.xml- Services configuration with embedder and LLM integrationsearch/query-profiles/*.xml- Pre-configured query profiles for different use casesmodels/- Pre-trained ranking models

Deploying to Vespa Cloud¶

from vespa.deployment import VespaCloud

from vespa.application import Vespa

from pathlib import Path

import os

import json

VESPA_TENANT_NAME = "vespa-team" # Replace with your tenant name

Here, set your desired application name. (Will be created in later steps)

Note that you can not have hyphen - or underscore _ in the application name.

VESPA_APPLICATION_NAME = "rag-blueprint" # No hyphens or underscores allowed

VESPA_SCHEMA_NAME = "doc" # RAG Blueprint uses 'doc' schema

repo_root = Path("src/rag-blueprint")

application_root = repo_root / "app"

Note, you could also enable a token endpoint, for easier connection after deployment, see Authenticating to Vespa Cloud for details. We will stick to the default MTLS key/cert authentication for this notebook.

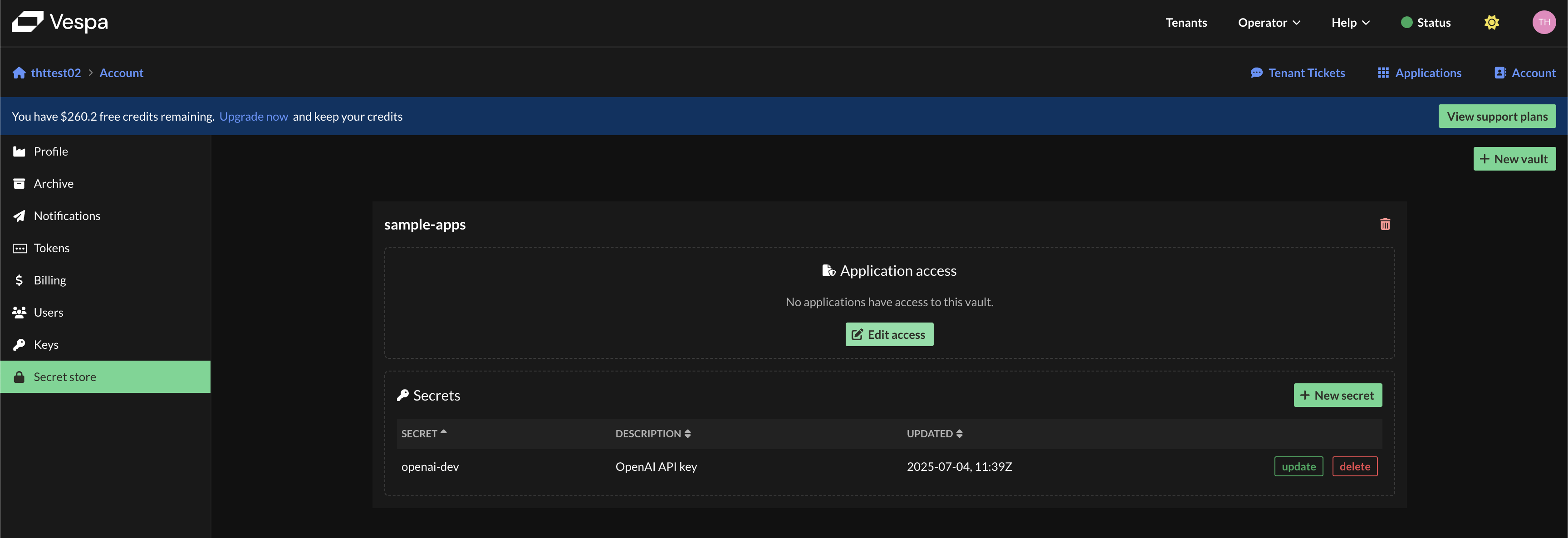

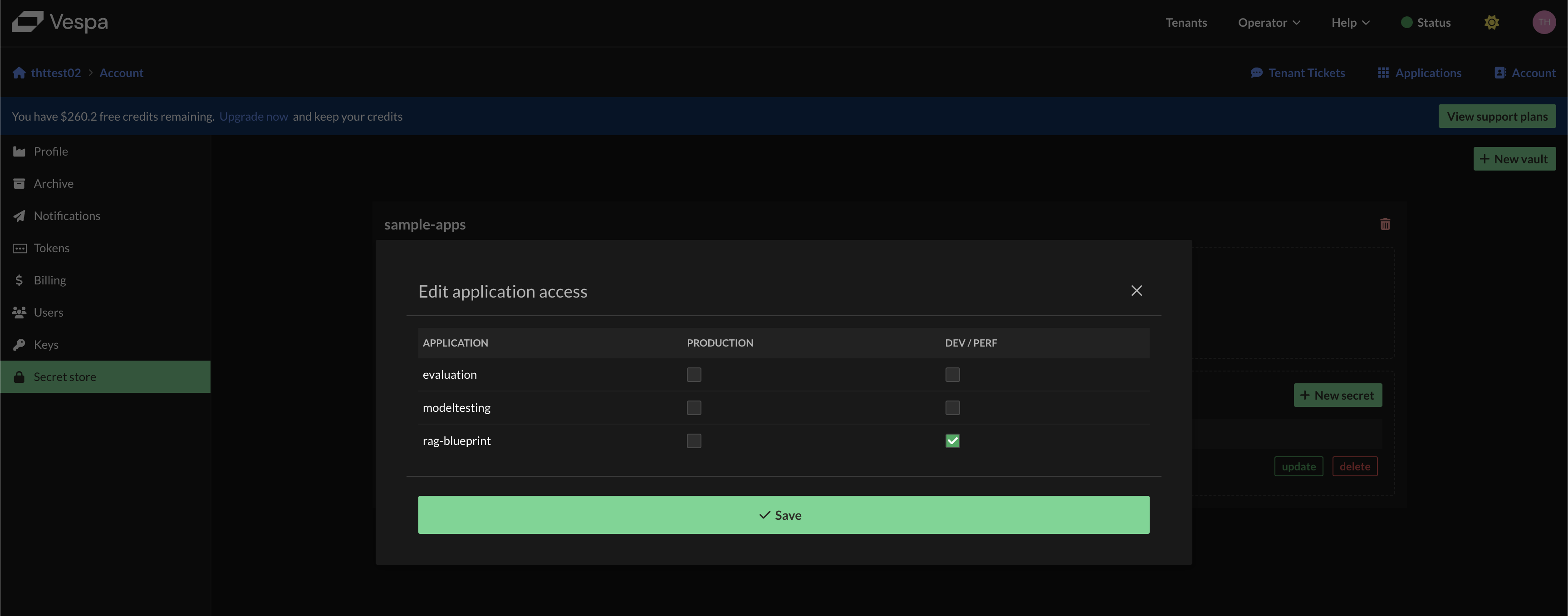

Adding secret to Vespa Cloud Secret Store¶

In order to use the LLM integration, you need to add your OpenAI API key to the Vespa Cloud Secret Store.

Then, we can reference this secret in our services.xml file, so that Vespa can use it to access the OpenAI API.

Below we have added a vault called sample-apps and a secret named openai-dev that contains the OpenAI API key.

We also need to assign permissions for our application to access this secret, but this can not be done until the application is deployed.

<!-- Uncomment this to use secret from Vespa Cloud Secret Store -->

<secrets>

<openai-api-key vault="sample-apps" name="openai-dev" />

</secrets>

Let us first take a look at the original services.xml file, which contains the configuration for the Vespa application services, including the LLM integration and embedder.

!!! note It is also possible to define the services.xml-configuration in python code, see Advanced Configuration.

from IPython.display import display, Markdown

def display_md(text: str, tag: str = "txt"):

text = text.rstrip()

md = f"""```{tag}

{text}

```"""

display(Markdown(md))

services_content = (application_root / "services.xml").read_text()

display_md(services_content, "xml")

<?xml version="1.0" encoding="utf-8"?>

<!-- Copyright Vespa.ai. Licensed under the terms of the Apache 2.0 license. See LICENSE in the

project root. -->

<services version="1.0" xmlns:deploy="vespa" xmlns:preprocess="properties"

minimum-required-vespa-version="8.519.55">

<container id="default" version="1.0">

<document-processing />

<document-api />

<!-- Uncomment this to use secret from Vespa Cloud Secret Store -->

<secrets>

<openai-api-key vault="sample-apps" name="openai-dev" />

</secrets>

<!-- Setup the client to OpenAI -->

<component id="openai" class="ai.vespa.llm.clients.OpenAI">

<config name="ai.vespa.llm.clients.llm-client">

<!-- Uncomment this to use secret from Vespa Cloud Secret Store -->

<apiKeySecretName>openai-api-key</apiKeySecretName>

</config>

</component>

<component id="nomicmb" type="hugging-face-embedder">

<transformer-model

url="https://data.vespa-cloud.com/onnx_models/nomic-ai-modernbert-embed-base/model.onnx" />

<transformer-token-type-ids />

<tokenizer-model

url="https://data.vespa-cloud.com/onnx_models/nomic-ai-modernbert-embed-base/tokenizer.json" />

<transformer-output>token_embeddings</transformer-output>

<max-tokens>8192</max-tokens>

<prepend>

<query>search_query:</query>

<document>search_document:</document>

</prepend>

</component>

<search>

<chain id="openai" inherits="vespa">

<searcher id="ai.vespa.search.llm.RAGSearcher">

<config name="ai.vespa.search.llm.llm-searcher">

<providerId>openai</providerId>

</config>

</searcher>

</chain>

</search>

<nodes>

<node hostalias="node1" />

</nodes>

</container>

<!-- See https://docs.vespa.ai/en/reference/services-content.html -->

<content id="content" version="1.0">

<min-redundancy>2</min-redundancy>

<documents>

<document type="doc" mode="index" />

</documents>

<nodes>

<node hostalias="node1" distribution-key="0" />

</nodes>

</content>

</services>

Deploy the application to Vespa Cloud¶

Now let's deploy the RAG Blueprint application to Vespa Cloud:

# This is only needed for CI.

VESPA_TEAM_API_KEY = os.getenv("VESPA_TEAM_API_KEY", None)

vespa_cloud = VespaCloud(

tenant=VESPA_TENANT_NAME,

application=VESPA_APPLICATION_NAME,

key_content=VESPA_TEAM_API_KEY,

application_root=application_root,

)

Setting application... Running: vespa config set application vespa-team.rag-blueprint.default Setting target cloud... Running: vespa config set target cloud Api-key found for control plane access. Using api-key.

Now, we will deploy the application to Vespa Cloud. This will take a few minutes, so feel free to skip ahead to the next section while waiting for the deployment to complete.

# Deploy the application

app: Vespa = vespa_cloud.deploy(disk_folder=application_root)

Deployment started in run 85 of dev-aws-us-east-1c for vespa-team.rag-blueprint. This may take a few minutes the first time. INFO [09:40:36] Deploying platform version 8.586.25 and application dev build 85 for dev-aws-us-east-1c of default ... INFO [09:40:36] Using CA signed certificate version 5 INFO [09:40:43] Session 379704 for tenant 'vespa-team' prepared and activated. INFO [09:40:43] ######## Details for all nodes ######## INFO [09:40:43] h125699b.dev.us-east-1c.aws.vespa-cloud.net: expected to be UP INFO [09:40:43] --- platform vespa/cloud-tenant-rhel8:8.586.25 INFO [09:40:43] --- storagenode on port 19102 has config generation 379704, wanted is 379704 INFO [09:40:43] --- searchnode on port 19107 has config generation 379704, wanted is 379704 INFO [09:40:43] --- distributor on port 19111 has config generation 379699, wanted is 379704 INFO [09:40:43] --- metricsproxy-container on port 19092 has config generation 379704, wanted is 379704 INFO [09:40:43] h125755a.dev.us-east-1c.aws.vespa-cloud.net: expected to be UP INFO [09:40:43] --- platform vespa/cloud-tenant-rhel8:8.586.25 INFO [09:40:43] --- container on port 4080 has config generation 379699, wanted is 379704 INFO [09:40:43] --- metricsproxy-container on port 19092 has config generation 379704, wanted is 379704 INFO [09:40:43] h97530b.dev.us-east-1c.aws.vespa-cloud.net: expected to be UP INFO [09:40:43] --- platform vespa/cloud-tenant-rhel8:8.586.25 INFO [09:40:43] --- logserver-container on port 4080 has config generation 379704, wanted is 379704 INFO [09:40:43] --- metricsproxy-container on port 19092 has config generation 379704, wanted is 379704 INFO [09:40:43] h119190c.dev.us-east-1c.aws.vespa-cloud.net: expected to be UP INFO [09:40:43] --- platform vespa/cloud-tenant-rhel8:8.586.25 INFO [09:40:43] --- container-clustercontroller on port 19050 has config generation 379699, wanted is 379704 INFO [09:40:43] --- metricsproxy-container on port 19092 has config generation 379699, wanted is 379704 INFO [09:40:51] Found endpoints: INFO [09:40:51] - dev.aws-us-east-1c INFO [09:40:51] |-- https://fe5fe13c.fe19121d.z.vespa-app.cloud/ (cluster 'default') INFO [09:40:51] Deployment of new application revision complete! Only region: aws-us-east-1c available in dev environment. Found mtls endpoint for default URL: https://fe5fe13c.fe19121d.z.vespa-app.cloud/ Application is up!

Uncomment secret reference and redeploy¶

Now is the time to assign permissions for this application (in this case ragblueprintdemo) to access the secret.

def uncomment_secrets(xml_content: str) -> str:

"""

Uncomments specific secret-related blocks in an XML string.

This function finds XML blocks that are commented out and contain either:

- <secrets>...</secrets> tags

- <apiKeySecretName>...</apiKeySecretName> tags

Args:

xml_content: A string containing the XML data with commented sections.

Returns:

The XML string with secret sections uncommented.

Example:

Input: "<!-- <secrets><key>value</key></secrets> -->"

Output: "<secrets><key>value</key></secrets>"

"""

import re

# Pattern to find commented-out <secrets> blocks

# Matches: <!-- <secrets>content</secrets> -->

secrets_pattern = re.compile(r"<!--\s*(<secrets>.*?</secrets>)\s*-->", re.DOTALL)

# Pattern to find commented-out <apiKeySecretName> blocks

# Matches: <!-- <apiKeySecretName>content</apiKeySecretName> -->

api_key_pattern = re.compile(

r"<!--\s*(<apiKeySecretName>.*?</apiKeySecretName>)\s*-->", re.DOTALL

)

# Uncomment the blocks by replacing with just the XML content

uncommented_content = secrets_pattern.sub(r"\1", xml_content)

uncommented_content = api_key_pattern.sub(r"\1", uncommented_content)

return uncommented_content

uncommented_services_content = uncomment_secrets(services_content)

display_md(uncommented_services_content, "xml")

<?xml version="1.0" encoding="utf-8"?>

<!-- Copyright Vespa.ai. Licensed under the terms of the Apache 2.0 license. See LICENSE in the

project root. -->

<services version="1.0" xmlns:deploy="vespa" xmlns:preprocess="properties"

minimum-required-vespa-version="8.519.55">

<container id="default" version="1.0">

<document-processing />

<document-api />

<!-- Uncomment this to use secret from Vespa Cloud Secret Store -->

<secrets>

<openai-api-key vault="sample-apps" name="openai-dev" />

</secrets>

<!-- Setup the client to OpenAI -->

<component id="openai" class="ai.vespa.llm.clients.OpenAI">

<config name="ai.vespa.llm.clients.llm-client">

<!-- Uncomment this to use secret from Vespa Cloud Secret Store -->

<apiKeySecretName>openai-api-key</apiKeySecretName>

</config>

</component>

<component id="nomicmb" type="hugging-face-embedder">

<transformer-model

url="https://data.vespa-cloud.com/onnx_models/nomic-ai-modernbert-embed-base/model.onnx" />

<transformer-token-type-ids />

<tokenizer-model

url="https://data.vespa-cloud.com/onnx_models/nomic-ai-modernbert-embed-base/tokenizer.json" />

<transformer-output>token_embeddings</transformer-output>

<max-tokens>8192</max-tokens>

<prepend>

<query>search_query:</query>

<document>search_document:</document>

</prepend>

</component>

<search>

<chain id="openai" inherits="vespa">

<searcher id="ai.vespa.search.llm.RAGSearcher">

<config name="ai.vespa.search.llm.llm-searcher">

<providerId>openai</providerId>

</config>

</searcher>

</chain>

</search>

<nodes>

<node hostalias="node1" />

</nodes>

</container>

<!-- See https://docs.vespa.ai/en/reference/services-content.html -->

<content id="content" version="1.0">

<min-redundancy>2</min-redundancy>

<documents>

<document type="doc" mode="index" />

</documents>

<nodes>

<node hostalias="node1" distribution-key="0" />

</nodes>

</content>

</services>

Let us write the uncommented services.xml file to the application package directory:

(application_root / "services.xml").write_text(uncommented_services_content)

2398

Now, we can redeploy the application to Vespa Cloud with the secret reference included in the services.xml file:

app: Vespa = vespa_cloud.deploy(disk_folder=application_root)

Deployment started in run 86 of dev-aws-us-east-1c for vespa-team.rag-blueprint. This may take a few minutes the first time. INFO [09:40:56] Deploying platform version 8.586.25 and application dev build 86 for dev-aws-us-east-1c of default ... INFO [09:40:56] Using CA signed certificate version 5 INFO [09:41:01] Session 379705 for tenant 'vespa-team' prepared and activated. INFO [09:41:01] ######## Details for all nodes ######## INFO [09:41:01] h125699b.dev.us-east-1c.aws.vespa-cloud.net: expected to be UP INFO [09:41:01] --- platform vespa/cloud-tenant-rhel8:8.586.25 INFO [09:41:01] --- storagenode on port 19102 has config generation 379704, wanted is 379705 INFO [09:41:01] --- searchnode on port 19107 has config generation 379705, wanted is 379705 INFO [09:41:01] --- distributor on port 19111 has config generation 379704, wanted is 379705 INFO [09:41:01] --- metricsproxy-container on port 19092 has config generation 379705, wanted is 379705 INFO [09:41:01] h125755a.dev.us-east-1c.aws.vespa-cloud.net: expected to be UP INFO [09:41:01] --- platform vespa/cloud-tenant-rhel8:8.586.25 INFO [09:41:01] --- container on port 4080 has config generation 379705, wanted is 379705 INFO [09:41:01] --- metricsproxy-container on port 19092 has config generation 379705, wanted is 379705 INFO [09:41:01] h97530b.dev.us-east-1c.aws.vespa-cloud.net: expected to be UP INFO [09:41:01] --- platform vespa/cloud-tenant-rhel8:8.586.25 INFO [09:41:01] --- logserver-container on port 4080 has config generation 379705, wanted is 379705 INFO [09:41:01] --- metricsproxy-container on port 19092 has config generation 379705, wanted is 379705 INFO [09:41:01] h119190c.dev.us-east-1c.aws.vespa-cloud.net: expected to be UP INFO [09:41:01] --- platform vespa/cloud-tenant-rhel8:8.586.25 INFO [09:41:01] --- container-clustercontroller on port 19050 has config generation 379705, wanted is 379705 INFO [09:41:01] --- metricsproxy-container on port 19092 has config generation 379705, wanted is 379705 INFO [09:41:08] Found endpoints: INFO [09:41:08] - dev.aws-us-east-1c INFO [09:41:08] |-- https://fe5fe13c.fe19121d.z.vespa-app.cloud/ (cluster 'default') INFO [09:41:08] Deployment of new application revision complete! Only region: aws-us-east-1c available in dev environment. Found mtls endpoint for default URL: https://fe5fe13c.fe19121d.z.vespa-app.cloud/ Application is up!

Feed Sample Data¶

The RAG Blueprint comes with sample data. Let's download and feed it to test our deployment:

doc_file = repo_root / "dataset" / "docs.jsonl"

with open(doc_file, "r") as f:

docs = [json.loads(line) for line in f.readlines()]

docs[:2]

[{'put': 'id:doc:doc::1',

'fields': {'created_timestamp': 1675209600,

'modified_timestamp': 1675296000,

'text': '# SynapseCore Module: Custom Attention Implementation\n\n```python\nimport torch\nimport torch.nn as nn\nimport torch.nn.functional as F\n\nclass CustomAttention(nn.Module):\n def __init__(self, hidden_dim):\n super(CustomAttention, self).__init__()\n self.hidden_dim = hidden_dim\n self.query_layer = nn.Linear(hidden_dim, hidden_dim)\n self.key_layer = nn.Linear(hidden_dim, hidden_dim)\n self.value_layer = nn.Linear(hidden_dim, hidden_dim)\n # More layers and logic here\n\n def forward(self, query_input, key_input, value_input, mask=None):\n # Q, K, V projections\n Q = self.query_layer(query_input)\n K = self.key_layer(key_input)\n V = self.value_layer(value_input)\n\n # Scaled Dot-Product Attention\n attention_scores = torch.matmul(Q, K.transpose(-2, -1)) / (self.hidden_dim ** 0.5)\n if mask is not None:\n attention_scores = attention_scores.masked_fill(mask == 0, -1e9)\n \n attention_probs = F.softmax(attention_scores, dim=-1)\n context_vector = torch.matmul(attention_probs, V)\n return context_vector, attention_probs\n\n# Example Usage:\n# attention_module = CustomAttention(hidden_dim=512)\n# output, probs = attention_module(q_tensor, k_tensor, v_tensor)\n```\n\n## Design Notes:\n- Optimized for speed with batched operations.\n- Includes optional masking for variable sequence lengths.\n## <MORE_TEXT:HERE>',

'favorite': True,

'last_opened_timestamp': 1717308000,

'open_count': 25,

'title': 'custom_attention_impl.py.md',

'id': '1'}},

{'put': 'id:doc:doc::2',

'fields': {'created_timestamp': 1709251200,

'modified_timestamp': 1709254800,

'text': "# YC Workshop Notes: Scaling B2B Sales (W25)\nDate: 2025-03-01\nSpeaker: [YC Partner Name]\n\n## Key Takeaways:\n1. **ICP Definition is Crucial:** Don't try to sell to everyone. Narrow down your Ideal Customer Profile.\n - Characteristics: Industry, company size, pain points, decision-maker roles.\n2. **Outbound Strategy:**\n - Personalized outreach > Mass emails.\n - Tools mentioned: Apollo.io, Outreach.io.\n - Metrics: Open rates, reply rates, meeting booked rates.\n3. **Sales Process Stages:**\n - Prospecting -> Qualification -> Demo -> Proposal -> Negotiation -> Close.\n - Define clear entry/exit criteria for each stage.\n4. **Value Proposition:** Clearly articulate how you solve the customer's pain and deliver ROI.\n5. **Early Hires:** First sales hire should be a 'hunter-farmer' hybrid if possible, or a strong individual contributor.\n\n## Action Items for SynapseFlow:\n- [ ] Refine ICP based on beta user feedback.\n- [ ] Experiment with a small, targeted outbound campaign for 2 specific verticals.\n- [ ] Draft initial sales playbook outline.\n## <MORE_TEXT:HERE>",

'favorite': True,

'last_opened_timestamp': 1717000000,

'open_count': 12,

'title': 'yc_b2b_sales_workshop_notes.md',

'id': '2'}}]

vespa_feed = []

for doc in docs:

vespa_doc = doc.copy()

vespa_doc["id"] = doc["fields"]["id"]

vespa_doc.pop("put")

vespa_feed.append(vespa_doc)

vespa_feed[:2]

[{'fields': {'created_timestamp': 1675209600,

'modified_timestamp': 1675296000,

'text': '# SynapseCore Module: Custom Attention Implementation\n\n```python\nimport torch\nimport torch.nn as nn\nimport torch.nn.functional as F\n\nclass CustomAttention(nn.Module):\n def __init__(self, hidden_dim):\n super(CustomAttention, self).__init__()\n self.hidden_dim = hidden_dim\n self.query_layer = nn.Linear(hidden_dim, hidden_dim)\n self.key_layer = nn.Linear(hidden_dim, hidden_dim)\n self.value_layer = nn.Linear(hidden_dim, hidden_dim)\n # More layers and logic here\n\n def forward(self, query_input, key_input, value_input, mask=None):\n # Q, K, V projections\n Q = self.query_layer(query_input)\n K = self.key_layer(key_input)\n V = self.value_layer(value_input)\n\n # Scaled Dot-Product Attention\n attention_scores = torch.matmul(Q, K.transpose(-2, -1)) / (self.hidden_dim ** 0.5)\n if mask is not None:\n attention_scores = attention_scores.masked_fill(mask == 0, -1e9)\n \n attention_probs = F.softmax(attention_scores, dim=-1)\n context_vector = torch.matmul(attention_probs, V)\n return context_vector, attention_probs\n\n# Example Usage:\n# attention_module = CustomAttention(hidden_dim=512)\n# output, probs = attention_module(q_tensor, k_tensor, v_tensor)\n```\n\n## Design Notes:\n- Optimized for speed with batched operations.\n- Includes optional masking for variable sequence lengths.\n## <MORE_TEXT:HERE>',

'favorite': True,

'last_opened_timestamp': 1717308000,

'open_count': 25,

'title': 'custom_attention_impl.py.md',

'id': '1'},

'id': '1'},

{'fields': {'created_timestamp': 1709251200,

'modified_timestamp': 1709254800,

'text': "# YC Workshop Notes: Scaling B2B Sales (W25)\nDate: 2025-03-01\nSpeaker: [YC Partner Name]\n\n## Key Takeaways:\n1. **ICP Definition is Crucial:** Don't try to sell to everyone. Narrow down your Ideal Customer Profile.\n - Characteristics: Industry, company size, pain points, decision-maker roles.\n2. **Outbound Strategy:**\n - Personalized outreach > Mass emails.\n - Tools mentioned: Apollo.io, Outreach.io.\n - Metrics: Open rates, reply rates, meeting booked rates.\n3. **Sales Process Stages:**\n - Prospecting -> Qualification -> Demo -> Proposal -> Negotiation -> Close.\n - Define clear entry/exit criteria for each stage.\n4. **Value Proposition:** Clearly articulate how you solve the customer's pain and deliver ROI.\n5. **Early Hires:** First sales hire should be a 'hunter-farmer' hybrid if possible, or a strong individual contributor.\n\n## Action Items for SynapseFlow:\n- [ ] Refine ICP based on beta user feedback.\n- [ ] Experiment with a small, targeted outbound campaign for 2 specific verticals.\n- [ ] Draft initial sales playbook outline.\n## <MORE_TEXT:HERE>",

'favorite': True,

'last_opened_timestamp': 1717000000,

'open_count': 12,

'title': 'yc_b2b_sales_workshop_notes.md',

'id': '2'},

'id': '2'}]

Now, let us feed the data to Vespa.

If you have a large dataset, you could also do this async, with feed_async_iterable(), see Feeding Vespa cloud for a detailed comparison.

from vespa.io import VespaResponse

def callback(response: VespaResponse, id: str):

if not response.is_successful():

print(

f"Failed to feed document {id} with status code {response.status_code}: Reason {response.get_json()}"

)

# Feed data into Vespa synchronously

app.feed_iterable(vespa_feed, schema=VESPA_SCHEMA_NAME, callback=callback)

Test a query to the Vespa application¶

Let us test some queries to see if the application is working as expected. We will use one of the pre-configured query profiles, which we will explain in more detail later. For now, let us just see that we can get some results back from the application.

query = "What is SynapseFlows strategy"

body = {

"query": query,

"queryProfile": "hybrid",

"hits": 2,

}

with app.syncio() as sess:

response = sess.query(body)

response.json

{'root': {'id': 'toplevel',

'relevance': 1.0,

'fields': {'totalCount': 100},

'coverage': {'coverage': 100,

'documents': 100,

'full': True,

'nodes': 1,

'results': 1,

'resultsFull': 1},

'children': [{'id': 'index:content/0/e369853debf684767dff1f16',

'relevance': 1.7111883427143333,

'source': 'content',

'fields': {'sddocname': 'doc',

'chunks_top3': ['# YC Application Draft Snippets - SynapseFlow (Late 2024)\n\n**Q: Describe what your company does in 50 characters or less.**\n- AI model deployment made easy for developers.\n- Effortless MLOps for startups.\n- Deploy ML models in minutes, not weeks.\n\n**Q: What is your company going to make?**\nSynapseFlow is building a PaaS solution that radically simplifies the deployment, management, and scaling of machine learning models. We provide a developer-first API and intuitive UI that abstracts away the complexities of MLOps infrastructure (Kubernetes, model servers, monitoring), allowing data scientists and developers ',

"to focus on building models, not wrestling with ops. Our vision is to be the Heroku for AI.\n\n**Q: Why did you pick this idea to work on?**\nAs an AI engineer, I've experienced firsthand the immense friction and time wasted in operationalizing ML models. Existing solutions are often too complex for smaller teams (e.g., full SageMaker/Vertex AI) or lack the flexibility needed for custom model development. We believe there's a huge unmet need for a simple, powerful, and affordable MLOps platform.\n\n## <MORE_TEXT:HERE> (More Q&A drafts, team background notes)"],

'summaryfeatures': {'top_3_chunk_sim_scores': {'type': 'tensor<float>(chunk{})',

'cells': {'0': 0.36166757345199585, '1': 0.21831661462783813}},

'vespa.summaryFeatures.cached': 0.0}}},

{'id': 'index:content/0/98f13708aca18c358d9d52d0',

'relevance': 1.309791587164871,

'source': 'content',

'fields': {'sddocname': 'doc',

'chunks_top3': ["# Ideas for SynapseFlow Blog Post - 'Demystifying MLOps'\n\n**Target Audience:** Developers, data scientists new to MLOps, product managers.\n**Goal:** Explain what MLOps is, why it's important, and how SynapseFlow helps.\n\n## Outline:\n1. **Introduction: The AI/ML Development Lifecycle is More Than Just Model Training**\n * Analogy: Building a model is like writing code; MLOps is like DevOps for ML.\n2. **What is MLOps? (The Core Pillars)**\n * Data Management (Versioning, Lineage, Quality)\n * Experiment Tracking & Model Versioning\n * CI/CD for ML (Continuous Integration, Continuous Delivery, Continuous Training)\n * Model Deployment & Serving\n * Monitoring & Observability (Performance, Drift, Data Quality)\n * Governance & Reproducibility\n3. **Why is MLOps Hard? (The Challenges)",

"**\n * Complexity of the ML lifecycle.\n * Bridging the gap between data science and engineering.\n * Tooling fragmentation.\n * Need for specialized skills.\n4. **How SynapseFlow Addresses These Challenges (Subtle Product Weave-in)**\n * Focus on ease of deployment (our current strength).\n * Streamlined workflow from experiment to production (our vision).\n * (Mention specific features that align with MLOps pillars without being overly salesy).\n5. **Getting Started with MLOps - Practical Tips**\n * Start simple, iterate.\n * Focus on automation early.\n * Choose tools that fit your team's scale and expertise.\n6. **Conclusion: MLOps is an Enabler for Realizing AI Value**\n\n## <MORE_TEXT:HERE> (Draft paragraphs, links to reference articles, potential graphics ideas)"],

'summaryfeatures': {'top_3_chunk_sim_scores': {'type': 'tensor<float>(chunk{})',

'cells': {'0': 0.3064674735069275, '1': 0.29259079694747925}},

'vespa.summaryFeatures.cached': 0.0}}}]}}

And by changing to the rag query profile, and adding the streaming=True parameter, we can stream the results from the LLM as server-sent events (SSE).

query = "What is SynapseFlows strategy"

body = {

"query": query,

"queryProfile": "rag",

"hits": 2,

}

resp_string = "" # Adding a string variable to use for asserting the response in CI.

with app.syncio() as sess:

stream_resp = sess.query(

body,

streaming=True,

)

for line in stream_resp:

if line.startswith("data: "):

event = json.loads(line[6:])

token = event.get("token", "")

resp_string += token

print(token, end="")

assert len(resp_string) > 10, "Response string should be longer than 10 characters."

SynapseFlow's strategy revolves around simplifying the deployment, management, and scaling of machine learning (ML) models through a developer-first platform-as-a-service (PaaS) solution. The key elements of their strategy include: 1. **Developer-Focused Solution:** SynapseFlow aims to provide a user-friendly API and intuitive user interface that abstracts the complexities associated with MLOps infrastructure (such as Kubernetes and model servers). This allows developers and data scientists to focus primarily on building models rather than dealing with operational challenges. 2. **Addressing Market Gaps:** The founders identified a significant pain point in the existing MLOps landscape, particularly for smaller teams. Many current solutions are either too complex or not flexible enough for custom model development. SynapseFlow targets this unmet need for a straightforward, powerful, and cost-effective platform. 3. **Vision of Simplified MLOps:** By positioning itself as "the Heroku for AI," SynapseFlow aims to offer an all-in-one solution that streamlines the workflow from experimentation to production, thus enhancing efficiency and speed in ML project deployment. 4. **Education and Support:** Their strategy also includes educational initiatives, as outlined in their blog post ideas targeting developers and product managers new to MLOps. By demystifying MLOps and discussing its challenges and the way SynapseFlow addresses them, they plan to enhance user understanding and adoption of their platform. 5. **Continuous Improvement:** SynapseFlow emphasizes a relentless focus on ease of deployment and improving automation capabilities, suggesting an iterative approach to platform development that responds to user feedback and evolving industry needs. Overall, SynapseFlow's strategy is centered on providing user-friendly solutions that reduce operational complexity, enabling faster deployment of machine learning models and supporting teams in successfully realizing the value of AI.

Great, we got some results. The quality is not very good yet, but we will show how to improve it in the next steps.

But first, let us explain the use case we are trying to solve with this RAG application.

Our use case¶

The sample use case is a document search application, for a user who wants to get answers and insights quickly from a document collection containing company documents, notes, learning material, training logs. To make the blueprint more realistic, we required a dataset with more structured fields than are commonly found in public datasets. Therefore, we used a Large Language Model (LLM) to generate a custom one.

It is a toy example, with only 100 documents, but we think it will illustrate the necessary concepts. You can also feel confident that the blueprint will provide a starting point that can scale as you want, with minimal changes.

Below you can see a sample document from the dataset.

import json

docs_file = repo_root / "dataset" / "docs.jsonl"

with open(docs_file) as f:

docs = [json.loads(line) for line in f]

docs[10]

{'put': 'id:doc:doc::11',

'fields': {'created_timestamp': 1698796800,

'modified_timestamp': 1698796800,

'text': "# Journal Entry - 2024-11-01\n\nFeeling the YC pressure cooker, but in a good way. The pace is insane. It reminds me of peaking for a powerlifting meet – everything has to be precise, every session counts, and you're constantly pushing your limits.\n\nThinking about **periodization** in lifting – how you structure macrocycles, mesocycles, and microcycles. Can this apply to startup sprints? We have our big YC Demo Day goal (macro), then maybe 2-week sprints are mesocycles, and daily tasks are microcycles. Need to ensure we're not just redlining constantly but building in phases of intense work, focused development, and even 'deload' (strategic rest/refinement) to avoid burnout and make sustainable progress.\n\n**RPE (Rate of Perceived Exertion)** is another concept. In the gym, it helps auto-regulate training based on how you feel. For the startup, maybe we need an RPE check for the team? Are we pushing too hard on a feature that's yielding low returns (high RPE, low ROI)? Can we adjust the 'load' (scope) or 'reps' (iterations) based on team capacity and feedback?\n\nIt's interesting how the discipline and structured thinking from strength training can offer mental models for tackling the chaos of a startup. Both require consistency, grit, and a willingness to fail and learn.\n\n## <MORE_TEXT:HERE> (More reflections on YC, specific project challenges)",

'favorite': False,

'last_opened_timestamp': 1700000000,

'open_count': 5,

'title': 'journal_2024_11_01_yc_and_lifting.md',

'id': '11'}}

In order to evaluate the quality of the RAG application, we also need a set of representative queries, with annotated relevant documents. Crucially, you need a set of representative queries that thoroughly cover your expected use case. More is better, but some eval is always better than none.

We used gemini-2.5-pro to create our queries and relevant document labels. Please check out our blog post to learn more about using LLM-as-a-judge.

We decided to generate some queries that need several documents to provide a good answer, and some that only need one document.

If these queries are representative of the use case, we will show that they can be a great starting point for creating an (initial) ranking expression that can be used for retrieving and ranking candidate documents. But, it can (and should) also be improved, for example by collecting user interaction data, human labeling and/ or using an LLM to generate relevance feedback following the initial ranking expression.

queries_file = repo_root / "queries" / "queries.json"

with open(queries_file) as f:

queries = json.load(f)

queries[10]

{'query_id': 'alex_q_11',

'query_text': "Where's that journal entry where I compared YC to powerlifting?",

'category': 'Navigational - Personal',

'description': 'Finding a specific personal reflection in his journal.',

'relevant_document_ids': ['11', '58', '100']}

Data modeling¶

Here is the schema that we will use for our sample application.

schema_file = repo_root / "app" / "schemas" / "doc.sd"

schema_content = schema_file.read_text()

display_md(schema_content)

txt

# Copyright Vespa.ai. Licensed under the terms of the Apache 2.0 license. See LICENSE in the project root.

schema doc {

document doc {

field id type string {

indexing: summary | attribute

}

field title type string {

indexing: index | summary

index: enable-bm25

}

field text type string {

}

field created_timestamp type long {

indexing: attribute | summary

}

field modified_timestamp type long {

indexing: attribute | summary

}

field last_opened_timestamp type long {

indexing: attribute | summary

}

field open_count type int {

indexing: attribute | summary

}

field favorite type bool {

indexing: attribute | summary

}

}

field title_embedding type tensor<int8>(x[96]) {

indexing: input title | embed | pack_bits | attribute | index

attribute {

distance-metric: hamming

}

}

field chunks type array<string> {

indexing: input text | chunk fixed-length 1024 | summary | index

index: enable-bm25

}

field chunk_embeddings type tensor<int8>(chunk{}, x[96]) {

indexing: input text | chunk fixed-length 1024 | embed | pack_bits | attribute | index

attribute {

distance-metric: hamming

}

}

fieldset default {

fields: title, chunks

}

document-summary no-chunks {

summary id {}

summary title {}

summary created_timestamp {}

summary modified_timestamp {}

summary last_opened_timestamp {}

summary open_count {}

summary favorite {}

summary chunks {}

}

document-summary top_3_chunks {

from-disk

summary chunks_top3 {

source: chunks

select-elements-by: top_3_chunk_sim_scores #this needs to be added a summary-feature to the rank-profile

}

}

}

Keep reading for an explanation and reasoning behind the choices in the schema.

Picking your searchable unit¶

When building a RAG application, your first key decision is choosing the "searchable unit." This is the basic block of information your system will search through and return as context to the LLM. For instance, if you have millions of documents, some hundreds of pages long, what should be your searchable unit?

Consider these points when selecting your searchable unit:

- Too fine-grained (e.g., individual sentences or very small paragraphs):

- Leads to duplication of context and metadata across many small units.

- May result in units lacking sufficient context for the LLM to make good selections or generate relevant responses.

- Increases overhead for managing many small document units.

- Too coarse-grained (e.g., very long chapters or entire large documents):

- Can cause performance issues due to the size of the units being processed.

- May lead to some large documents appearing relevant to too many queries, reducing precision.

- If you embed the whole document, a too large context will lead to reduced retrieval quality.

We recommend erring on the side of using slightly larger units.

- LLMs are increasingly capable of handling larger contexts.

- In Vespa, you can index larger units, while avoiding data duplication and performance issues, by returning only the most relevant parts.

With Vespa, it is now possible to return only the top k most relevant chunks of a document, and include and combine both document-level and chunk-level features in ranking.

Chunk selection¶

Assume you have chosen a document as your searchable unit. Your documents may then contain text index fields of highly variable lengths. Consider for example a corpus of web pages. Some might be very long, while the average is well within the recommended size. See scaling retrieval size for more details.

While we recommend implementing guards against too long documents in your feeding pipeline, you still probably do not want to return every chunk of the top k documents to an LLM for RAG.

In Vespa, we now have a solution for this problem. Below, we show how you can score both documents as well as individual chunks, and use that score to select the best chunks to be returned in a summary, instead of returning all chunks belonging to the top k ranked documents.

Compute closeness per chunk in a ranking function; use elementwise(bm25(chunks), i, double) for a per-chunk text signal. See rank feature reference

This allows you to pick a large document as the searchable unit, while still addressing the potential drawbacks many encounter as follows:

- Pick your (larger) document as your searchable unit.

- Chunk the text-fields automatically on indexing.

- Embed each chunk (enabled through Vespa's multivector support)

- Calculate chunk-level features (e.g. bm25 and embedding similarity) and document-level features. Combine as you want.

- Limit the actual chunks that are returned to the ones that are actually relevant context for the LLM.

This allows you to index larger units, while avoiding data duplication and performance issues, by returning only the most relevant parts.

Vespa also supports automatic chunking in the indexing language.

Here are the parts of the schema, which defines the searchable unit as a document with a text field, and automatically chunks it into smaller parts of 1024 characters, which each are embedded and indexed separately:

txt

field chunks type array<string> {

indexing: input text | chunk fixed-length 1024 | summary | index

index: enable-bm25

}

field chunk_embeddings type tensor<int8>(chunk{}, x[96]) {

indexing: input text | chunk fixed-length 1024 | embed | pack_bits | attribute | index

attribute {

distance-metric: hamming

}

}

In Vespa, we can specify which chunks to be returned with a summary feature, see docs for details. For this blueprint, we will return the top 3 chunks based on the similarity score of the chunk embeddings, which is calculated in the ranking phase. Note that this feature could be any chunk-level summary feature defined in your rank-profile.

Here is how the summary feature is calculated in the rank-profile:

txt

# This function unpack the bits of each dimenrion of the mapped chunk_embeddings attribute tensor

function chunk_emb_vecs() {

expression: unpack_bits(attribute(chunk_embeddings))

}

# This function calculate the dot product between the query embedding vector and the chunk embeddings (both are now float) over the x dimension

function chunk_dot_prod() {

expression: reduce(query(float_embedding) * chunk_emb_vecs(), sum, x)

}

# This function calculate the L2 normalized length of an input tensor

function vector_norms(t) {

expression: sqrt(sum(pow(t, 2), x))

}

# Here we calculate cosine similarity by dividing the dot product by the product of the L2 normalized query embedding and document embeddings

function chunk_sim_scores() {

expression: chunk_dot_prod() / (vector_norms(chunk_emb_vecs()) * vector_norms(query(float_embedding)))

}

function top_3_chunk_text_scores() {

expression: top(3, chunk_text_scores())

}

function top_3_chunk_sim_scores() {

expression: top(3, chunk_sim_scores())

}

summary-features {

top_3_chunk_sim_scores

}

The ranking expression may seem a bit complex, as we chose to embed each chunk independently, store the embeddings in a binarized format, and then unpack them to calculate similarity based on their float representations. For single dimension dense vector similarity between same-precision embeddings, this can be simplified significantly using the closeness) convenience function.

Note that we want to use the float-representation of the query-embedding, and thus also need to convert the binary embedding of the chunks to float. After that, we can calculate the similarity score between the query embedding and the chunk embeddings using cosine similarity (the dot product, and then normalize it by the norms of the embeddings).

See ranking expressions for more details on the top-function, and other functions available for ranking expressions.

Now, we can use this summary feature in our document summary to return the top 3 chunks of the document, which will be used as context for the LLM. Note that we can also define a document summary that returns all chunks, which might be useful for another use case, such as deep research.

txt

document-summary top_3_chunks {

from-disk

summary chunks_top3 {

source: chunks

select-elements-by: top_3_chunk_sim_scores #this needs to be added a summary-feature to the rank-profile

}

}

Use multiple text fields, consider multiple embeddings¶

We recommend indexing different textual content as separate indexes. These can be searched together, using field-sets

In our schema, this is exemplified by the sections below, which define the title and chunks fields as separate indexed text fields.

txt

...

field title type title {

indexing: index | summary

index: enable-bm25

}

field chunks type array<string> {

indexing: input text | chunk fixed-length 1024 | summary | index

index: enable-bm25

}

Whether you should have separate embedding fields, depends on whether the added memory usage is justified by the quality improvement you could get from the additional embedding field.

We choose to index both a title_embedding and a chunk_embeddings field for this blueprint, as we aim to minimize cost by embedding the binary vectors.

txt

field title_embedding type tensor<int8>(title{}, x[96]) {

indexing: input text | embed | pack_bits | attribute | index

attribute {

distance-metric: hamming

}

}

field chunk_embeddings type tensor<int8>(chunk{}, x[96]) {

indexing: input text | chunk fixed-length 1024 | embed | pack_bits | attribute | index

attribute {

distance-metric: hamming

}

}

Indexing several embedding fields may not be worth the cost for you. Evaluate whether the cost-quality trade-off is worth it for your application.

If you have different vector space representations of your document (e.g images), indexing them separately is likely worth it, as they are likely to provide signals that are complementary to the text-based embeddings.

Model Metadata and Signals Using Structured Fields¶

We recommend modeling metadata and signals as structured fields in your schema. Below are some general recommendations, as well as the implementation in our blueprint schema.

Metadata — knowledge about your data:

- Authors, publish time, source, links, category, price, …

- Usage: filters, ranking, grouping/aggregation

- Index only metadata that are strong filters

In our blueprint schema, we include these metadata fields to demonstrate these concepts:

id- document identifiertitle- document name/filename for display and text matchingcreated_timestamp,modified_timestamp- temporal metadata for filtering and ranking by recency

Signals — observations about your data:

- Popularity, quality, spam probability, click_probability, …

- Usage: ranking

- Often updated separately via partial updates

- Multiple teams can add their own signals independently

In our blueprint schema, we include several of these signals:

last_opened_timestamp- user engagement signal for personalizationopen_count- popularity signal indicating document importancefavorite- explicit user preference signal, can be used for boosting relevant content

These fields are configured as attribute | summary to enable efficient filtering, sorting, and grouping operations while being returned in search results. The timestamp fields allow for temporal filtering (e.g., "recent documents") and recency-based ranking, while usage signals like open_count and favorite can boost frequently accessed or explicitly marked important documents.

Consider parent-child relationships for low-cardinality metadata. Most large scale RAG application schemas contain at least a hundred structured fields.

LLM-generation with OpenAI-client¶

Vespa supports both Local LLMs, and any OpenAI-compatible API for LLM generation. For details, see LLMs in Vespa

The recommended way to provide an API key is by using the secret store in Vespa Cloud.

To enable this, you need to create a vault (if you don't have one already) and a secret through the Vespa Cloud console. If your vault is named sample-apps and contains a secret with the name openai-api-key, you would use the following configuration in your services.xml to set up the OpenAI client to use that secret:

<secrets>

<openai-api-key vault="sample-apps" name="openai-dev" />

</secrets>

<!-- Setup the client to OpenAI -->

<component id="openai" class="ai.vespa.llm.clients.OpenAI">

<config name="ai.vespa.llm.clients.llm-client">

<apiKeySecretRef>openai-api-key</apiKeySecretRef>

</config>

</component>

Alternatively, for local deployments, you can set the X-LLM-API-KEY header in your query to use the OpenAI client for generation.

To test generation using the OpenAI client, post a query that runs the openai search chain, with format=sse. (Use format=json for a streaming json response including both the search hits and the LLM-generated tokens.)

vespa query \

--timeout 60 \

--header="X-LLM-API-KEY:<your-api-key>" \

yql='select *

from doc

where userInput(@query) or

({label:"title_label", targetHits:100}nearestNeighbor(title_embedding, embedding)) or

({label:"chunks_label", targetHits:100}nearestNeighbor(chunk_embeddings, embedding))' \

query="Summarize the key architectural decisions documented for SynapseFlow's v0.2 release." \

searchChain=openai \

format=sse \

hits=5

Structuring your vespa application¶

This section provides recommendations for structuring your Vespa application package. See also the application package docs for more details on the application package structure. Note that this is not mandatory, and it might be simpler to start without query profiles and rank profiles, but as you scale out your application, it will be beneficial to have a well-structured application package.

Consider the following structure for our application package:

# Let's explore the RAG Blueprint application structure

print(tree("src/rag-blueprint"))

/Users/thomas/Repos/pyvespa/docs/sphinx/source/examples/src/rag-blueprint ├── app │ ├── models │ │ └── lightgbm_model.json │ ├── schemas │ │ ├── doc │ │ │ ├── base-features.profile │ │ │ ├── collect-second-phase.profile │ │ │ ├── collect-training-data.profile │ │ │ ├── learned-linear.profile │ │ │ ├── match-only.profile │ │ │ └── second-with-gbdt.profile │ │ └── doc.sd │ ├── search │ │ └── query-profiles │ │ ├── deepresearch-with-gbdt.xml │ │ ├── deepresearch.xml │ │ ├── hybrid-with-gbdt.xml │ │ ├── hybrid.xml │ │ ├── rag-with-gbdt.xml │ │ └── rag.xml │ ├── security │ │ └── clients.pem │ └── services.xml ├── dataset │ ├── docs.jsonl │ ├── queries.json │ └── test_queries.json ├── eval │ ├── output │ │ ├── Vespa-training-data_match_first_phase_20250623_133241.csv │ │ ├── Vespa-training-data_match_first_phase_20250623_133241_logreg_coefficients.txt │ │ ├── Vespa-training-data_match_rank_second_phase_20250623_135819.csv │ │ └── Vespa-training-data_match_rank_second_phase_20250623_135819_feature_importance.csv │ ├── collect_pyvespa.py │ ├── evaluate_match_phase.py │ ├── evaluate_ranking.py │ ├── pyproject.toml │ ├── README.md │ ├── resp.json │ ├── train_lightgbm.py │ └── train_logistic_regression.py ├── deploy-locally.md ├── generation.md ├── query-profiles.md ├── README.md └── relevance.md

You can see that we have separated the query profiles, and rank profiles into their own directories.

Manage queries in query profiles¶

Query profiles let you maintain collections of query parameters in one file. Clients choose a query profile → the profile sets everything else. This lets us change behavior for a use case without involving clients.

Let us take a closer look at 3 of the query profiles in our sample application.

hybridragdeepresearch

hybrid query profile¶

This query profile will be the one used by clients for traditional search, where the user is presented a limited number of hits. Our other query profiles will inherit this one (but may override some fields).

qp_dir = repo_root / "app" / "search" / "query-profiles"

hybrid_qp = (qp_dir / "hybrid.xml").read_text()

display_md(hybrid_qp, tag="xml")

<?xml version="1.0" encoding="utf-8"?>

<!-- Copyright Vespa.ai. Licensed under the terms of the Apache 2.0 license. See LICENSE in the

project root. -->

<!--

match_avg_top_3_chunk_sim_scores : 13.383840

match_avg_top_3_chunk_text_scores : 0.203145

match_bm25(chunks) : 0.159914

match_bm25(title) : 0.191867

match_max_chunk_sim_scores : 10.067169

match_max_chunk_text_scores : 0.153392

Intercept : -7.798639

-->

<query-profile id="hybrid">

<field name="schema">doc</field>

<field name="ranking.features.query(embedding)">embed(@query)</field>

<field name="ranking.features.query(float_embedding)">embed(@query)</field>

<field name="ranking.features.query(intercept)">-7.798639</field>

<field name="ranking.features.query(avg_top_3_chunk_sim_scores_param)">13.383840</field>

<field name="ranking.features.query(avg_top_3_chunk_text_scores_param)">0.203145</field>

<field name="ranking.features.query(bm25_chunks_param)">0.159914</field>

<field name="ranking.features.query(bm25_title_param)">0.191867</field>

<field name="ranking.features.query(max_chunk_sim_scores_param)">10.067169</field>

<field name="ranking.features.query(max_chunk_text_scores_param)">0.153392</field>

<field name="yql">

select *

from %{schema}

where userInput(@query) or

({label:"title_label", targetHits:100}nearestNeighbor(title_embedding, embedding)) or

({label:"chunks_label", targetHits:100}nearestNeighbor(chunk_embeddings, embedding))

</field>

<field name="hits">10</field>

<field name="ranking.profile">learned-linear</field>

<field name="presentation.summary">top_3_chunks</field>

</query-profile>

rag query profile¶

This will be the query profile where the openai searchChain will be added, to generate a response based on the retrieved context.

Here, we set some configuration that are specific to this use case.

rag_blueprint_qp = (qp_dir / "rag.xml").read_text()

display_md(rag_blueprint_qp, tag="xml")

<?xml version="1.0" encoding="utf-8"?>

<!-- Copyright Vespa.ai. Licensed under the terms of the Apache 2.0 license. See LICENSE in the

project root. -->

<query-profile id="rag" inherits="hybrid">

<field name="hits">50</field>

<field name="searchChain">openai</field>

<field name="presentation.format">sse</field>

</query-profile>

deepresearch query profile¶

Again, we will inherit from the hybrid query profile, but override with a targetHits value of 10 000 (original was 100) that prioritizes recall over latency.

We will also increase number of hits to be returned, and increase the timeout to 5 seconds.

deep_qp = (qp_dir / "deepresearch.xml").read_text()

display_md(deep_qp, tag="xml")

<?xml version="1.0" encoding="utf-8"?>

<!-- Copyright Vespa.ai. Licensed under the terms of the Apache 2.0 license. See LICENSE in the

project root. -->

<query-profile id="deepresearch" inherits="hybrid">

<field name="yql">

select *

from %{schema}

where userInput(@query) or

({label:"title_label", targetHits:10000}nearestNeighbor(title_embedding, embedding)) or

({label:"chunks_label", targetHits:10000}nearestNeighbor(chunk_embeddings, embedding))

</field>

<field name="hits">100</field>

<field name="timeout">5s</field>

</query-profile>

We will leave out the LLM-generation for this one, and let an LLM agent on the client side be responsible for using this API call as a tool, and to determine whether enough relevant context to answer has been retrieved.

Note that the targetHits parameter set here does not really makes sense until your dataset reach a certain scale.

As we add more rank-profiles, we can also inherit the existing query profiles, only to override the ranking.profile field to use a different rank profile. This is what we have done for the rag-with-gbdt and deepresearch-with-gbdt query profiles, which will use the second-with-gbdt rank profile instead of the learned-linear rank profile.

rag_gbdt_qp = (qp_dir / "rag-with-gbdt.xml").read_text()

display_md(rag_gbdt_qp, tag="xml")

<?xml version="1.0" encoding="utf-8"?>

<!-- Copyright Vespa.ai. Licensed under the terms of the Apache 2.0 license. See LICENSE in the

project root. -->

<query-profile id="rag-with-gbdt" inherits="hybrid-with-gbdt">

<field name="hits">50</field>

<field name="searchChain">openai</field>

<field name="presentation.format">sse</field>

</query-profile>

Separating out rank profiles¶

To build a great RAG application, assume you’ll need many ranking models. This will allow you to bucket-test alternatives continuously and to serve different use cases, including data collection for different phases, and the rank profiles to be used in production.

Separate common functions/setup into parent rank profiles and use .profile files.

Phased ranking in Vespa¶

Before we move on, it might be useful to recap Vespa´s phased ranking approach.

Below is a schematic overview of how to think about retrieval and ranking for this RAG blueprint. Since we are developing this as a tutorial using a small toy dataset, the application can be deployed in a single machine, using a single docker container, where only one container node and one container node will run. This is obviously not the case for most real-world RAG applications, so this is cruical to have in mind as you want to scale your application.

It is worth noting that parameters such as targetHits (for the match phase) and rerank-count (for first and second phase) are applied per content node. Also note that the stateless container nodes can also be scaled independently to handle increased query load.

Configuring match-phase (retrieval)¶

This section will contain important considerations for the retrieval-phase of a RAG application in Vespa.

The goal of the retrieval phase is to retrieve candidate documents efficiently, and maximize recall, without exposing too many documents to ranking.

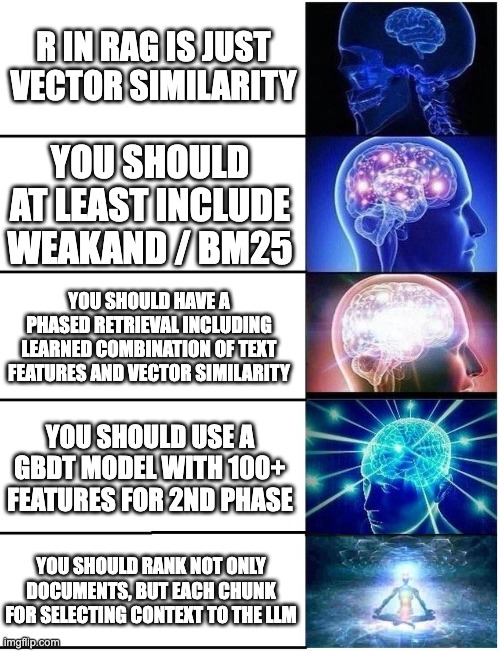

Choosing a Retrieval Strategy: Vector, Text, or Hybrid?¶

As you could see from the schema, we create and index both a text representation and a vector representation for each chunk of the document. This will allow us to use both text-based features and semantic features for both recall and ranking.

The text and vector representation complement each other well:

- Text-only → misses recall of semantically similar content

- Vector-only → misses recall of specific content not well understood by the embedding models

Our recommendation is to default to hybrid retrieval:

select *

from doc

where userInput(@query) or

({label:"title_label", targetHits:1000}nearestNeighbor(title_embedding, embedding)) or

({label:"chunks_label", targetHits:1000}nearestNeighbor(chunk_embeddings, embedding))

In generic domains, or if you have fine-tuned an embedding model for your specific data, you might consider a vector-only approach:

select *

from doc

where rank({targetHits:10000}nearestNeighbor(embeddings_field, query_embedding, userInput(@query)))

Notice that only the first argument of the rank-operator will be used to determine if a document is a match, while all arguments are used for calculating rank features. This mean we can do vector only for matching, but still use text-based features such as bm25 and nativeRank for ranking.

Note that if you do this, it makes sense to increase the number of targetHits for the nearestNeighbor-operator.

For our sample application, we add three different retrieval operators (that are combined with OR), one with weakAnd for text matching, and two nearestNeighbor operators for vector matching, one for the title and one for the chunks. This will allow us to retrieve both relevant documents based on text and vector similarity, while also allowing us to return the most relevant chunks of the documents.

select *

from doc

where userInput(@query) or

({targetHits:100}nearestNeighbor(title_embedding, embedding)) or

({targetHits:100}nearestNeighbor(chunk_embeddings, embedding))

Choosing your embedding model (and strategy)¶

Choice of embedding model will be a trade-off between inference time (both indexing and query time), memory usage (embedding dimensions) and quality. There are many good open-source models available, and we recommend checking out the MTEB leaderboard, and look at the Retrieval-column to gauge performance, while also considering the memory usage, vector dimensions, and context length of the model.

See model hub for a list of provided models ready to use with Vespa. See also Huggingface Embedder for details on using other models (exported as ONNX) with Vespa.

In addition to dense vector representation, Vespa supports sparse embeddings (token weights) and multi-vector (ColBERT-style) embeddings. See our example notebook of using the bge-m3 model, which supports both, with Vespa.

Vespa also supports Matryoshka embeddings, which can be a great way of reducing inference cost for retrieval phases, by using a subset of the embedding dimensions, while using more dimensions for increased precision in the later ranking phases.

For domain-specific applications or less popular languages, you may want to consider finetuning a model on your own data.

Consider binary vectors for recall¶

Another decision to make is which precision you will use for your embeddings. See binarization docs for an introduction to binarization in Vespa.

For most cases, binary vectors (in Vespa, packed into int8-representation) will provide an attractive tradeoff, especially for recall during match-phase.

Consider these factors to determine whether this holds true for your application:

- Reduces memory-vector cost by 5 – 30 ×

- Reduces query and indexing cost by 30 ×

- Often reduces quality by only a few percentage points

txt

field binary_chunk_embeddings type tensor<int8>(chunk{}, x) {

indexing: input text | chunk fixed-length 1024 | embed | pack_bits | attribute | index

attribute { distance-metric: hamming }

}

If you need higher precision vector similarity, you should use bfloat16 precision, and consider paging these vectors to disk to avoid large memory cost. Note that this means that when accessing this field in ranking, they will also need to be read from disk, so you need to restrict the number of hits that accesses this field to avoid performance issues.

txt

field chunk_embeddings type tensor<bfloat16>(chunk{}, x) {

indexing: input text | chunk fixed-length 1024 | embed | attribute

attribute: paged

}

For example, if you want to calculate closeness for a paged embedding vector in first-phase, consider configuring your retrieval operators (typically weakAnd and/or nearestNeighbor, optionally combined with filters) so that not too many hits are matched. Another option is to enable match-phase limiting, see match-phase docs. In essence, you restrict the number of matches by specifying an attribute field.

Consider float-binary for ranking¶

In our blueprint, we choose to index binary vectors of the documents. This does not prevent us from using the float-representation of the query embedding though.

By unpacking the binary document chunk embeddings to their float representations (using unpack_bits), we can calculate the similarity between query and document with slightly higher precision using a float-binary dot product, instead of hamming distance (binary-binary)

Below, you can see how we can do this:

txt

rank-profile collect-training-data {

inputs {

query(embedding) tensor<int8>(x[96])

query(float_embedding) tensor<float>(x[768])

}

function chunk_emb_vecs() {

expression: unpack_bits(attribute(chunk_embeddings))

}

function chunk_dot_prod() {

expression: reduce(query(float_embedding) * chunk_emb_vecs(), sum, x)

}

function vector_norms(t) {

expression: sqrt(sum(pow(t, 2), x))

}

function chunk_sim_scores() {

expression: chunk_dot_prod() / (vector_norms(chunk_emb_vecs()) * vector_norms(query(float_embedding)))

}

function top_3_chunk_text_scores() {

expression: top(3, chunk_text_scores())

}

function top_3_chunk_sim_scores() {

expression: top(3, chunk_sim_scores())

}

}

Use complex linguistics/recall only for precision¶

Vespa gives you extensive control over linguistics. You can decide match mode, stemming, normalization, or control derived tokens.

It is also possible to use more specific operators than weakAnd to match only close occurrences (near/ onear), multiple alternatives (equiv), weight items, set connectivity, and apply query-rewrite rules.

Don’t use this to increase recall — improve your embedding model instead.

Consider using it to improve precision when needed.

Evaluating recall of the retrieval phase¶

To know whether your retrieval phase is working well, you need to measure recall, number of total matches and the reported time spent.

We can use VespaMatchEvaluator from the pyvespa client library to do this.

For this sample application, we set up an evaluation script that compares three different retrieval strategies, let us call them "retrieval arms":

- Semantic-only: Uses only vector similarity through

nearestNeighboroperators. - WeakAnd-only: Uses only text-based matching with

userQuery(). - Hybrid: Combines both approaches with OR logic.

Note that this is only generic suggestion for and that you are of course free to include both filter clauses, grouping, predicates, geosearch etc. to support your specific use cases.

It is recommended to use a ranking profile that does not use any first-phase ranking, to run the match-phase evaluation faster.

The evaluation will output metrics like:

- Recall (percentage of relevant documents matched)

- Total number of matches per query

- Query latency statistics

- Per-query detailed results (when

write_verbose=True) to identify "offending" queries with regards to recall or performance.

This will be valuable input for tuning each of them.

Run the cells below to evaluate all three retrieval strategies on your dataset.

ids_to_query = {query["query_id"]: query["query_text"] for query in queries}

relevant_docs = {

query["query_id"]: set(query["relevant_document_ids"])

for query in queries

if "relevant_document_ids" in query

}

from vespa.evaluation import VespaMatchEvaluator

from vespa.application import Vespa

import vespa.querybuilder as qb

import json

from pathlib import Path

def match_weakand_query_fn(query_text: str, top_k: int) -> dict:

return {

"yql": str(

qb.select("*").from_(VESPA_SCHEMA_NAME).where(qb.userQuery(query_text))

),

"query": query_text,

"ranking": "match-only",

"input.query(embedding)": f"embed({query_text})",

"presentation.summary": "no-chunks",

}

def match_hybrid_query_fn(query_text: str, top_k: int) -> dict:

return {

"yql": str(

qb.select("*")

.from_(VESPA_SCHEMA_NAME)

.where(

qb.nearestNeighbor(

field="title_embedding",

query_vector="embedding",

annotations={"targetHits": 100},

)

| qb.nearestNeighbor(

field="chunk_embeddings",

query_vector="embedding",

annotations={"targetHits": 100},

)

| qb.userQuery(

query_text,

)

)

),

"query": query_text,

"ranking": "match-only",

"input.query(embedding)": f"embed({query_text})",

"presentation.summary": "no-chunks",

}

def match_semantic_query_fn(query_text: str, top_k: int) -> dict:

return {

"yql": str(

qb.select("*")

.from_(VESPA_SCHEMA_NAME)

.where(

qb.nearestNeighbor(

field="title_embedding",

query_vector="embedding",

annotations={"targetHits": 100},

)

| qb.nearestNeighbor(

field="chunk_embeddings",

query_vector="embedding",

annotations={"targetHits": 100},

)

)

),

"query": query_text,

"ranking": "match-only",

"input.query(embedding)": f"embed({query_text})",

"presentation.summary": "no-chunks",

}

match_results = {}

for evaluator_name, query_fn in [

("semantic", match_semantic_query_fn),

("weakand", match_weakand_query_fn),

("hybrid", match_hybrid_query_fn),

]:

print(f"Evaluating {evaluator_name}...")

match_evaluator = VespaMatchEvaluator(

queries=ids_to_query,

relevant_docs=relevant_docs,

vespa_query_fn=query_fn,

app=app,

name="test-run",

id_field="id",

write_csv=False,

write_verbose=False, # optionally write verbose metrics to CSV

)

results = match_evaluator()

match_results[evaluator_name] = results

Evaluating semantic... Evaluating weakand... Evaluating hybrid...

import pandas as pd

df = pd.DataFrame(match_results)

df

| semantic | weakand | hybrid | |

|---|---|---|---|

| match_recall | 1.00000 | 1.0000 | 1.00000 |

| avg_recall_per_query | 1.00000 | 1.0000 | 1.00000 |

| total_relevant_docs | 51.00000 | 51.0000 | 51.00000 |

| total_matched_relevant | 51.00000 | 51.0000 | 51.00000 |

| avg_matched_per_query | 100.00000 | 88.7500 | 100.00000 |

| total_queries | 20.00000 | 20.0000 | 20.00000 |

| searchtime_avg | 0.06275 | 0.0330 | 0.04395 |

| searchtime_q50 | 0.03200 | 0.0290 | 0.03750 |

| searchtime_q90 | 0.06400 | 0.0511 | 0.08500 |

| searchtime_q95 | 0.10055 | 0.0703 | 0.08800 |

Tuning the retrieval phase¶

We can see that all queries match all relevant documents, which is expected, since we use targetHits:100 in the nearestNeighbor operator, and this is also the default for weakAnd(and userQuery). By setting targetHits lower, we can see that recall will drop.

In general, you have these options if you want to increase recall:

- Increase

targetHitsin your retrieval operators (e.g.,nearestNeighbor,weakAnd). - Improve your embedding model (use a better model or finetune it on your data).

- You can also consider tuning HNSW parameters, see docs on HNSW.

Conversely, if you want to reduce the latency of one of your retrieval 'arms' at the cost of a small trade-off in recall, you can:

- Tune

weakAndparameters. This has potential to 3x your performance for theweakAnd-parameter of your query, see blog post.

Below are some empirically found default parameters that work well for most use cases:

txt

rank-profile optimized inherits baseline {

filter-threshold: 0.05

weakand {

stopword-limit: 0.6

adjust-target: 0.01

}

}

See the reference for more details on the weakAnd parameters.

These can also be set as query parameters.

- As already mentioned, consider binary vectors for your embeddings.

- Consider using an embedding model with less dimensions, or using only a subset of the dimensions (e.g., using Matryoshka embeddings).

First-phase ranking¶

For the first-phase ranking, we must use a computationally cheap function, as it is applied to all documents matched in the retrieval phase. For many applications, this can amount to millions of candidate documents.

Common options include (learned) linear combination of features including text similarity features, vector closeness, and metadata. It could also be a heuristic handwritten function.

Text features should include nativeRank or bm25 — not fieldMatch (it is too expensive).

Considerations for deciding whether to choose bm25 or nativeRank:

- bm25: cheapest, strong significance, no proximity, not normalized.

- nativeRank: 2 – 3 × costlier, truncated significance, includes proximity, normalized.

For this blueprint, we opted for using bm25 for first phase, but you could evaluate and compare to see whether the additional cost of using nativeRank is justified by increased quality.

Collecting training data for first-phase ranking¶

The features we will use for first-phase ranking are not normalized (ie. they have values in different ranges). This means we can't just weight them equally and expect that to be a good proxy for relevance.

Below we will show how we can find (learn) optimal weights (coefficients) for each feature, so that we can combine them into a ranking-expression on the format:

a * bm25(title) + b * bm25(chunks) + c * max_chunk_sim_scores() + d * max_chunk_text_scores() + e * avg_top_3_chunk_sim_scores() + f * avg_top_3_chunk_text_scores()

The first thing we need to is to collect training data. We do this using the VespaFeatureCollector from the pyvespa library.

These are the features we will include:

txt

rank-profile collect-training-data {

match-features {

bm25(title)

bm25(chunks)

max_chunk_sim_scores

max_chunk_text_scores

avg_top_3_chunk_sim_scores

avg_top_3_chunk_text_scores

}

# Since we need both binary embeddings (for match-phase) and float embeddings (for ranking) we define it as two inputs.

inputs {

query(embedding) tensor<int8>(x[96])

query(float_embedding) tensor<float>(x[768])

}

rank chunks {

element-gap: 0 # Fixed length chunking should not cause any positional gap between elements

}

function chunk_text_scores() {

expression: elementwise(bm25(chunks),chunk,float)

}

function chunk_emb_vecs() {

expression: unpack_bits(attribute(chunk_embeddings))

}

function chunk_dot_prod() {

expression: reduce(query(float_embedding) * chunk_emb_vecs(), sum, x)

}

function vector_norms(t) {

expression: sqrt(sum(pow(t, 2), x))

}

function chunk_sim_scores() {

expression: chunk_dot_prod() / (vector_norms(chunk_emb_vecs()) * vector_norms(query(float_embedding)))

}

function top_3_chunk_text_scores() {

expression: top(3, chunk_text_scores())

}

function top_3_chunk_sim_scores() {

expression: top(3, chunk_sim_scores())

}

function avg_top_3_chunk_text_scores() {

expression: reduce(top_3_chunk_text_scores(), avg, chunk)

}

function avg_top_3_chunk_sim_scores() {